Cowichan Lake Cuththroat Trout Exploitation Analysis 2022

The suggested citation for this analytic appendix is:

Dalgarno, S. & Thorley, J.L. (2023) Cowichan Lake Cuththroat Trout Exploitation Analysis 2022. A Poisson Consulting Analytic Appendix. URL: https://www.poissonconsulting.ca/f/86098464.

Background

Cowichan Lake supports an important Cutthroat Trout fishery. To provide information on the natural and fishing mortality, Cutthroat Trout were caught by angling and tagged with acoustic transmitters and/or a $100 reward tag. Some acoustic transmitters were equipped with depth and temperature sensors.

A fixed receiver array was deployed in the lake to monitor fish movements and location year-round. Additional fixed receivers were deployed during the spawning season at known spawning tributaries. A lake census was completed 4 times per year using mobile receivers.

The objectives of this analysis are to:

- Estimate fishing and natural mortality.

- Estimate annual variation in fishing and natural mortality.

- Estimate seasonal variation in fishing and natural mortality.

- Estimate effect of handling on natural mortality.

- Estimate variation in fishing and natural mortality by fork length.

Data Preparation

The data were provided to Poisson Consulting Ltd. by Aswea Porter following extensive data screening.

Statistical Analysis

Model parameters were estimated using Bayesian methods. The estimates were produced using JAGS (Plummer 2003). For additional information on Bayesian estimation the reader is referred to McElreath (2020).

Unless stated otherwise, the Bayesian analyses used weakly informative normal and half-normal prior distributions (Gelman, Simpson, and Betancourt 2017). The posterior distributions were estimated from 1500 Markov Chain Monte Carlo (MCMC) samples thinned from the second halves of 3 chains (Kery and Schaub 2011, 38–40). Model convergence was confirmed by ensuring that the potential scale reduction factor \(\hat{R} \leq 1.05\) (Kery and Schaub 2011, 40) and the effective sample size (Brooks et al. 2011) \(\textrm{ESS} \geq 150\) for each of the monitored parameters (Kery and Schaub 2011, 61).

The parameters are summarised in terms of the point estimate, lower and upper 95% credible limits (CLs) and the surprisal s-value (Greenland 2019). The estimate is the median (50th percentile) of the MCMC samples while the 95% CLs are the 2.5th and 97.5th percentiles. The s-value indicates how surprising it would be to discover that the true value of the parameter is in the opposite direction to the estimate (Greenland 2019). An s-value of \(>\) 4.3 bits, which is equivalent to a significant p-value \(<\) 0.05 (Kery and Schaub 2011; Greenland and Poole 2013), indicates that the surprise would be equivalent to throwing at least 4.3 heads in a row.

The condition that parameters describing the effects of secondary (nuisance) explanatory variable(s) have significant p-values was used as a model selection heuristic (Kery and Schaub 2011). Based on a similar argument, the condition that random effects have a standard deviation with a lower 95% CL \(>\) 5% of the estimate was used as an additional model selection heuristic. Primary explanatory variables are evaluated based on their estimated effect sizes with 95% CLs (Bradford, Korman, and Higgins 2005)

Model adequacy was assessed via posterior predictive checks (Kery and Schaub 2011). More specifically, the number of zeros and the first four central moments (mean, variance, skewness and kurtosis) for the deviance residuals were compared to the expected values by simulating new residuals. In this context the s-value indicates how surprising each observed metric is given the estimated posterior probability distribution for the residual variation.

The results are displayed graphically by plotting the modeled relationships between particular variables and the response(s) with the remaining variables held constant. In general, continuous and discrete fixed variables are held constant at their mean and first level values, respectively, while random variables are held constant at their typical values (expected values of the underlying hyperdistributions) (Kery and Schaub 2011, 77–82).

The analyses were implemented using R version 4.2.2

(R Core Team 2021) and the

mbr family of packages.

Model Descriptions

Survival

The natural mortality and recapture probability were estimated using a Bayesian individual state-space survival model (Thorley and Andrusak 2017) with monthly intervals. The survival model incorporated natural and handling mortality, acoustic detections and movements from a fixed receiver array and quarterly lake census, vertical movements from acoustic tags equipped with depth sensors, FLOY tag loss, recapture, reporting and release.

The expected fork length at monthly period \(t\) was estimated from length

at capture (\(L_{i,fi}\)) plus the length increment expected under a Von

Bertalanffy Growth Curve (Walters and Martell 2004)

\[L_{i,t} = L_{i,fi} + (L_{∞} − L_{i,fi} )(1 − e^{(−\frac{1}{12} \cdot k(t −fi))})\]

where \(fi\) is the period at capture and \(\frac{1}{12}\) in the exponent

adjusts for the fact that there are 12 periods per year.Linf was fixed

to a biologically plausible value of 775 mm based on prior information

(personal communication, R. Ptolemy); k of 0.23 was estimated from 88

aged Cutthroat Trout in Cowichan Lake (personal communication, Brendan

Andersen).

In addition to assumptions 1, 4 to 10, and 13 in Thorley and Andrusak (2017), the survival model assumes that:

- The effect of handling mortality is restricted to the first two monthly periods after initial capture or recapture.

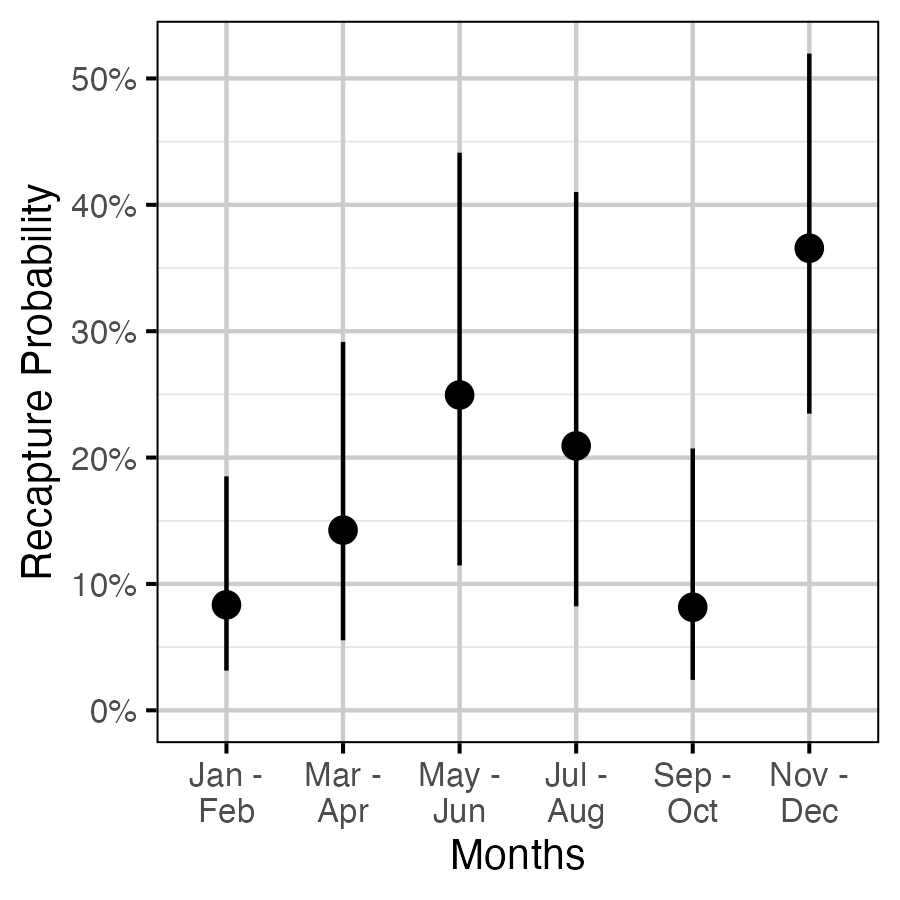

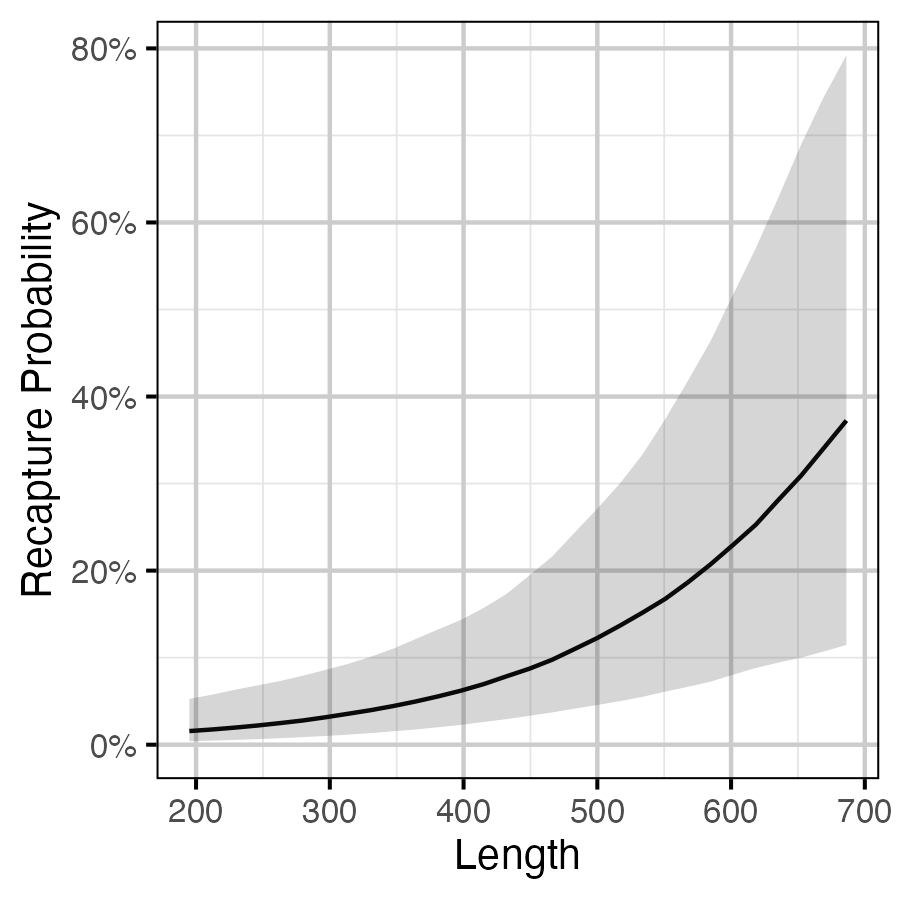

- Recapture probability varies by bi-monthly period and fork length.

- Natural mortality varies by season, study year and size class.

- The probability of detection depends on whether a fish is alive or has died near or far from a receiver and whether a census has occurred.

- The monthly interval probability of FLOY tag loss is 0.001.

- All recaptured fish that are released have their FLOY tags removed.

- Reporting of a recaptured fish with a FLOY tag is likely greater

than 90%.

- Survival of fish tagged with a FLOY tag only is the same as those

tagged with acoustic tags and a FLOY tag.

- Acoustic tags are functional over their estimated battery lifespan and are not shed.

Seasons are defined as winter (December-February), spring (March-May), summer (June-August), and fall (September-November).

Study years span from October 01 to September 30 of the following year (i.e., study year 1 is 2019-10-01 to 2020-09-30). This was used instead of calendar year to ensure that each year group includes all months.

Size classes are defined as small (\(\le\) 350 mm), medium (350 \(-\) 500 mm) and large (> 500 mm).

Preliminary analyses indicated lack of support for variation in recapture probability by study year.

We assumed that the monthly interval probability of FLOY tag loss was 0.001 (0.012 annual probability) based on tag loss estimates from double-tagged Lake Trout in Horse Lake.

Preliminary attempts to estimate tag loss in the model using an uninformative prior confirmed that this assumption was consistent with the available information, although we had to fix tag loss in the model to avoid poor convergence.

Model Templates

Survival

.model{

bMortality ~ dnorm(-3, 3^-2)

bMortalityHandling ~ dnorm(0, 2^-2)

bMonthsHandling <- 2

bDetectedAlive ~ dnorm(0, 2^-2)

bDetectedAliveCensus ~ dnorm(0, 2^-2)

bDieNear ~ dbeta(1, 1)

bDetectedDeadNear ~ dnorm(1, 1^-2)

bDetectedDeadNearCensus ~ dnorm(0, 2^-2)

bDetectedDeadFar ~ dnorm(-1, 1^-2)

bDetectedDeadFarCensus ~ dnorm(0, 2^-2)

bMovedH ~ dbeta(1, 1)

bMovedV ~ dbeta(1, 1)

bRecaptured ~ dnorm(0, 2^-2)

bReported ~ dbeta(1, 1)

bReleased ~ dbeta(1, 1)

# mode of 0.001

bTagLoss <- 0.001

bRecapturedLength ~ dnorm(0, 2^-2)

sMortalitySizeSeason ~ dexp(1)

for (i in 1:nSize){

for(j in 1:nSeason){

bMortalitySizeSeason[i,j] ~ dnorm(0, sMortalitySizeSeason^-2)

}

}

bMortalitySeason[1] <- 0

for (i in 2:nSeason) {

bMortalitySeason[i] ~ dnorm(0, 2^-2)

}

bMortalitySize[1] <- 0

for (i in 2:nSize) {

bMortalitySize[i] ~ dnorm(0, 2^-2)

}

bMortalityAnnual[1] <- 0

for (i in 2:nAnnual) {

bMortalityAnnual[i] ~ dnorm(0, 2^-2)

}

bRecapturedBiMonth[1] <- 0

for (i in 2:nBiMonth) {

bRecapturedBiMonth[i] ~ dnorm(0, 2^-2)

}

for (i in 1:nCapture){

eMortalityHandling[i, PeriodCapture[i]] <- ilogit(bMortalityHandling)

eMonthsSinceCapture[i,PeriodCapture[i]] <- 1-Recaptured[i,PeriodCapture[i]]

logit(eDetectedAlive[i, PeriodCapture[i]]) <- bDetectedAlive

eDieNear[i] ~ dbern(bDieNear)

logit(eDetectedDeadNear[i, PeriodCapture[i]]) <- bDetectedDeadNear

logit(eDetectedDeadFar[i, PeriodCapture[i]]) <- bDetectedDeadFar

eDetectedDead[i, PeriodCapture[i]] <- eDieNear[i] * eDetectedDeadNear[i, PeriodCapture[i]] +

(1 - eDieNear[i]) * eDetectedDeadFar[i, PeriodCapture[i]]

logit(eMortality[i,PeriodCapture[i]]) <- bMortality + bMortalitySeason[Season[PeriodCapture[i]]] + bMortalityAnnual[Annual[PeriodCapture[i]]] + bMortalityHandling + bMortalitySize[Size[i, PeriodCapture[i]]] + bMortalitySizeSeason[Size[i, PeriodCapture[i]], Season[PeriodCapture[i]]]

eMovedH[i,PeriodCapture[i]] <- bMovedH

eMovedV[i,PeriodCapture[i]] <- bMovedV

eDetected[i,PeriodCapture[i]] <- Alive[i,PeriodCapture[i]] * eDetectedAlive[i,PeriodCapture[i]] +

(1-Alive[i,PeriodCapture[i]]) * eDetectedDead[i,PeriodCapture[i]]

logit(eRecaptured[i,PeriodCapture[i]]) <- bRecaptured + bRecapturedBiMonth[BiMonth[PeriodCapture[i]]] + bRecapturedLength * Length[i, PeriodCapture[i]]

eReported[i,PeriodCapture[i]] <- bReported

eReleased[i,PeriodCapture[i]] <- bReleased

InLake[i,PeriodCapture[i]] <- 1

Alive[i,PeriodCapture[i]] ~ dbern((1-eMortality[i,PeriodCapture[i]]) * (1-eMortalityHandling[i,PeriodCapture[i]]))

TBarTag[i,PeriodCapture[i]] ~ dbern(1-bTagLoss)

Detected[i,PeriodCapture[i]] ~ dbern(Monitored[i,PeriodCapture[i]] * eDetected[i,PeriodCapture[i]])

MovedH[i,PeriodCapture[i]] ~ dbern(Alive[i,PeriodCapture[i]] * Detected[i,PeriodCapture[i]] * eMovedH[i,PeriodCapture[i]])

MovedV[i,PeriodCapture[i]] ~ dbern(Alive[i,PeriodCapture[i]] * Sensor[i,PeriodCapture[i]] * Detected[i,PeriodCapture[i]] * eMovedV[i,PeriodCapture[i]])

Recaptured[i,PeriodCapture[i]] ~ dbern(Alive[i,PeriodCapture[i]] * eRecaptured[i,PeriodCapture[i]])

Reported[i,PeriodCapture[i]] ~ dbern(Recaptured[i,PeriodCapture[i]] * eReported[i,PeriodCapture[i]] * TBarTag[i,PeriodCapture[i]])

Released[i,PeriodCapture[i]] ~ dbern(Recaptured[i,PeriodCapture[i]] * eReleased[i,PeriodCapture[i]])

for(j in (PeriodCapture[i]+1):nPeriod) {

eMortalityHandling[i,j] <- ilogit(bMortalityHandling) * step(bMonthsHandling - eMonthsSinceCapture[i,j-1] - 1)

eMonthsSinceCapture[i,j] <- (1-Recaptured[i,j]) * (eMonthsSinceCapture[i,j-1] + 1)

logit(eDetectedAlive[i,j]) <- bDetectedAlive + bDetectedAliveCensus * Census[j]

logit(eDetectedDeadNear[i,j]) <- bDetectedDeadNear + bDetectedDeadNearCensus * Census[j]

logit(eDetectedDeadFar[i,j]) <- bDetectedDeadFar + bDetectedDeadFarCensus * Census[j]

eDetectedDead[i,j] <- eDieNear[i] * eDetectedDeadNear[i,j] + (1 - eDieNear[i]) * eDetectedDeadFar[i,j]

eDetected[i,j] <- Alive[i,j] * eDetectedAlive[i,j] + (1-Alive[i,j]) * eDetectedDead[i,j]

logit(eMortality[i,j]) <- bMortality + bMortalityAnnual[Annual[j]] + bMortalitySize[Size[i,j]] + bMortalitySeason[Season[j]] + bMortalitySizeSeason[Size[i, j], Season[j]]

eMovedH[i,j] <- bMovedH

eMovedV[i,j] <- bMovedV

logit(eRecaptured[i,j]) <- bRecaptured + bRecapturedBiMonth[BiMonth[j]] + bRecapturedLength * Length[i,j]

eReported[i,j] <- bReported

eReleased[i,j] <- bReleased

InLake[i,j] ~ dbern(InLake[i,j-1] * ((1 - (Recaptured[i,j-1])) + Recaptured[i,j-1] * Released[i,j-1]))

Alive[i,j] ~ dbern(InLake[i,j] * Alive[i,j-1] * (1-eMortality[i,j]) * (1-eMortalityHandling[i,j]))

TBarTag[i,j] ~ dbern(TBarTag[i,j-1] * (1-Recaptured[i,j-1]) * (1-bTagLoss))

Detected[i,j] ~ dbern(InLake[i,j] * Monitored[i,j] * eDetected[i,j])

MovedH[i,j] ~ dbern(Alive[i,j-1] * Detected[i,j] * eMovedH[i,j])

MovedV[i,j] ~ dbern(Alive[i,j] * Sensor[i,j] * Detected[i,j] * eMovedV[i,j])

Recaptured[i,j] ~ dbern(Alive[i,j] * eRecaptured[i,j])

Reported[i,j] ~ dbern(Recaptured[i,j] * eReported[i,j] * TBarTag[i,j])

Released[i,j] ~ dbern(Recaptured[i,j] * eReleased[i,j])

}

}Block 1. Survival model description.

Results

Tables

Survival

Table 1. Parameter descriptions.

| Parameter | Description |

|---|---|

bDetectedAliveCensus |

Effect of a census on bDetectedAliveCensus |

bDetectedAlive |

Log odds probability of being detected if alive |

bDetectedDeadFarCensus |

Effect of a census on bDetectedDeadFar |

bDetectedDeadFar |

Log odds probability of being detected if dead far from a fixed receiver |

bDetectedDeadNearCensus |

Effect of a census on bDetectedDeadNear |

bDetectedDeadNear |

Log odds probability of being detected if dead near to a fixed receiver |

bDieNear |

Probability of dying near to (vs far from) a fixed receiver |

bMonthsHandling |

The number of months for which handling affects mortality. |

bMortalityAnnual[i] |

Effect of ith study year on bMortality |

bMortalityHandling |

Log odds probability of dying after (re)capture in a monthly period. |

bMortalitySeason[i] |

Effect of ith season on bMortality |

bMortalitySizeSeason[i, j] |

Effect of the ith size class in the jth season

on bMortality |

bMortalitySize[i] |

Effect of ith size class on bMortality |

bMortality |

Log odds probability of dying of natural causes in a monthly period in the 1st season of the 1st study year |

bMovedH |

Probability of moving horizontally if alive and detected in a monthly period |

bMovedV |

Probability of moving vertically if alive and detected in a monthly period |

bRecapturedBiMonth[i] |

Effect of ith bi-monthly period on bRecaptured |

bRecapturedLength |

Effect of standardized fork length on bRecaptured |

bRecaptured |

Log odds probability of being recaptured if alive in a monthly period |

bReleased |

Probability of being released if recaptured |

bReported |

Probability of being reported if recaptured with a FLOY tag |

bTagLoss |

Probability of shedding a FLOY tag in a monthly period |

sMortalitySizeSeason |

SD of bMortalitySizeSeason |

Table 2. Model coefficients.

| term | estimate | lower | upper | svalue |

|---|---|---|---|---|

| bDetectedAlive | 1.5124443 | 1.3521088 | 1.6879880 | 10.5517083 |

| bDetectedAliveCensus | 0.5267324 | 0.1684182 | 0.9184323 | 7.7443533 |

| bDetectedDeadFar | -4.7349605 | -5.5433535 | -4.0601915 | 10.5517083 |

| bDetectedDeadFarCensus | 1.4358142 | 0.4359574 | 2.4678576 | 6.8512685 |

| bDetectedDeadNear | 3.4446875 | 2.6664792 | 4.3794141 | 10.5517083 |

| bDetectedDeadNearCensus | 0.4517294 | -1.2867801 | 2.7216166 | 0.6194935 |

| bDieNear | 0.1597343 | 0.0912128 | 0.2425454 | 10.5517083 |

| bMortality | -2.7279720 | -3.6951785 | -1.8489400 | 10.5517083 |

| bMortalityAnnual[2] | -0.5207410 | -1.1007187 | 0.0692804 | 3.6328450 |

| bMortalityAnnual[3] | -0.0110849 | -0.5288290 | 0.5469936 | 0.0350233 |

| bMortalityHandling | -3.6260900 | -4.8068634 | -2.8680559 | 10.5517083 |

| bMortalitySeason[2] | 0.8397951 | -0.0422713 | 1.9211833 | 4.0439136 |

| bMortalitySeason[3] | 0.6079954 | -0.4287597 | 1.6583177 | 2.1551035 |

| bMortalitySeason[4] | -1.1790925 | -3.2256364 | 0.2205870 | 3.2943204 |

| bMortalitySize[2] | -0.4083766 | -1.3649302 | 0.5383783 | 1.5321175 |

| bMortalitySize[3] | 0.5538940 | -0.6143962 | 1.5153268 | 1.8478047 |

| bMovedH | 0.7187808 | 0.6927041 | 0.7433001 | 10.5517083 |

| bMovedV | 0.5615130 | 0.4975645 | 0.6260394 | 10.5517083 |

| bRecaptured | -4.9210918 | -5.9278402 | -4.0624138 | 10.5517083 |

| bRecapturedBiMonth[2] | 0.5784220 | -0.6884153 | 1.8078003 | 1.5100491 |

| bRecapturedBiMonth[3] | 1.1962917 | 0.0821370 | 2.3686239 | 4.7188182 |

| bRecapturedBiMonth[4] | 0.9908608 | -0.3021691 | 2.1797669 | 3.1170800 |

| bRecapturedBiMonth[5] | -0.0224748 | -1.4487265 | 1.3648077 | 0.0330551 |

| bRecapturedBiMonth[6] | 1.6573679 | 0.6525127 | 2.8024925 | 10.5517083 |

| bRecapturedLength | 0.7445511 | 0.3506431 | 1.1605587 | 10.5517083 |

| bReleased | 0.4485633 | 0.3019657 | 0.6059783 | 10.5517083 |

| bReported | 0.9820359 | 0.9118694 | 0.9992893 | 10.5517083 |

| sMortalitySizeSeason | 0.3510984 | 0.0238554 | 1.1781297 | 10.5517083 |

Table 3. Model convergence.

| n | K | nchains | niters | nthin | ess | rhat | converged |

|---|---|---|---|---|---|---|---|

| 8917 | 28 | 3 | 500 | 75 | 433 | 1.051 | FALSE |

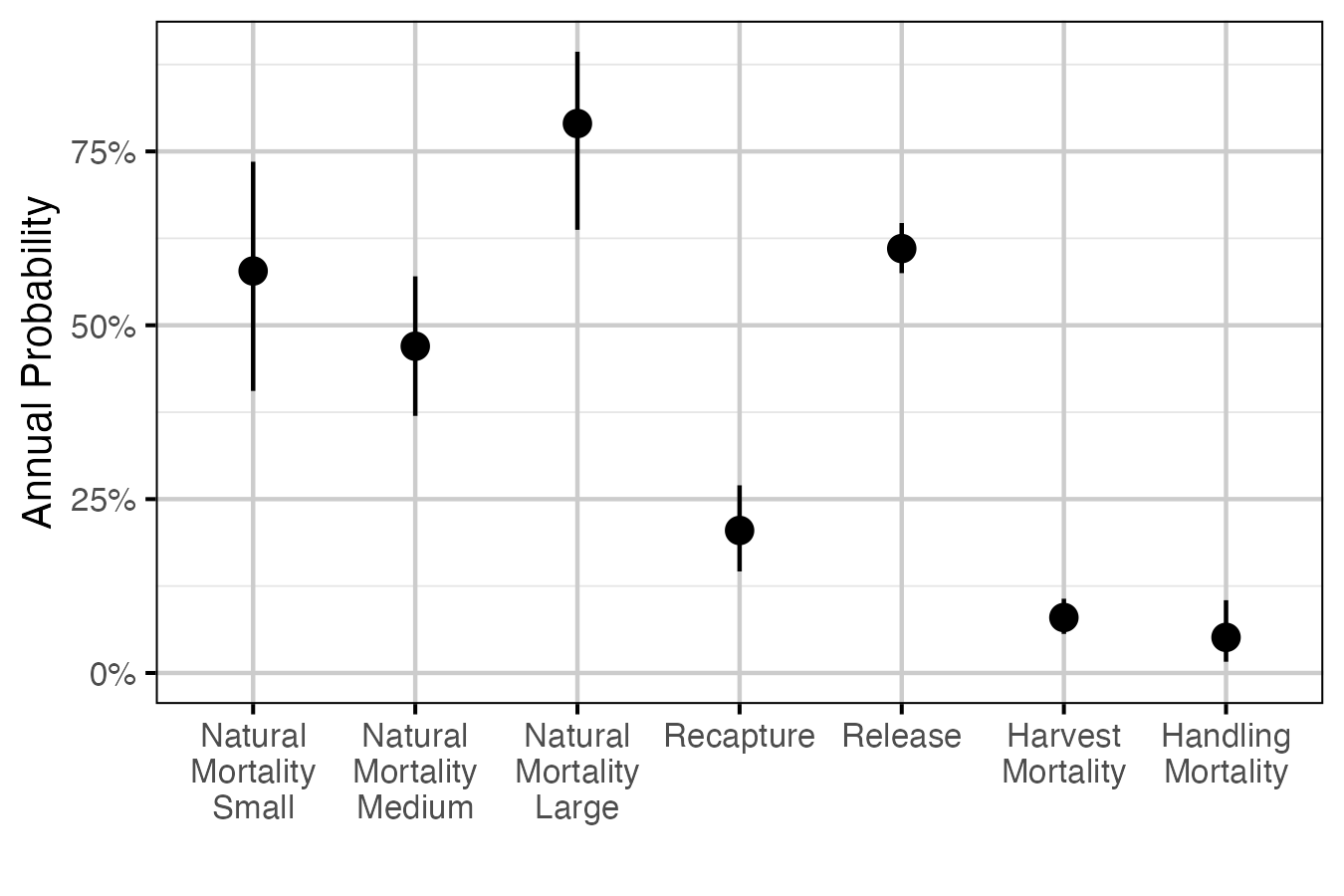

Table 4. The estimated annual interval probabilities (with 95% CIs). Natural mortalities for each size class are the average across study year. Handling mortality is the probability of mortality from handling, assuming that the effect of handling lasts for two months and occurs once in a year. Harvest mortality is the recapture probability multiplied by the probability of being harvested if recaptured, assuming that recapture can only occur once in a year. Release is the probability of being released if recaptured.

| derived quantity | estimate | lower | upper |

|---|---|---|---|

| Natural Mortality Small | 0.5780 | 0.4060 | 0.735 |

| Natural Mortality Medium | 0.4700 | 0.3700 | 0.570 |

| Natural Mortality Large | 0.7900 | 0.6370 | 0.893 |

| Recapture | 0.2050 | 0.1460 | 0.270 |

| Release | 0.6100 | 0.5750 | 0.647 |

| Harvest Mortality | 0.0797 | 0.0561 | 0.107 |

| Handling Mortality | 0.0512 | 0.0161 | 0.105 |

Figures

Growth

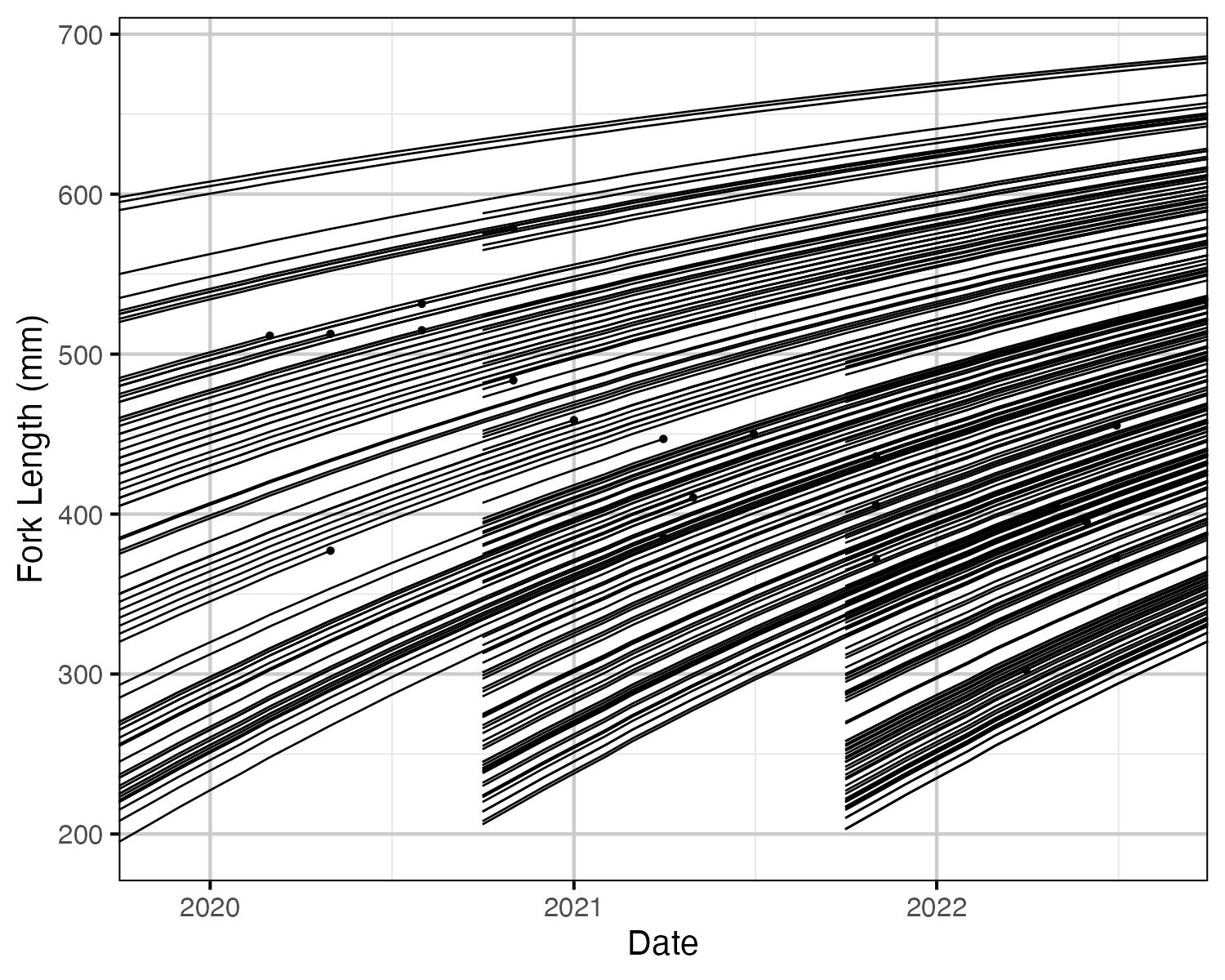

Figure 1. Estimated fork length for each fish by date. Points represent recapture and harvest.

Survival

Figure 2. The estimated annual interval probabilities (with 95% CIs). Natural mortalities for each size class are the average across study year. Handling mortality is the probability of mortality from handling, assuming that the effect of handling lasts for two months and occurs once in a year. Harvest mortality is the recapture probability multiplied by the probability of being harvested if recaptured, assuming that recapture can only occur once in a year. Release is the probability of being released if recaptured.

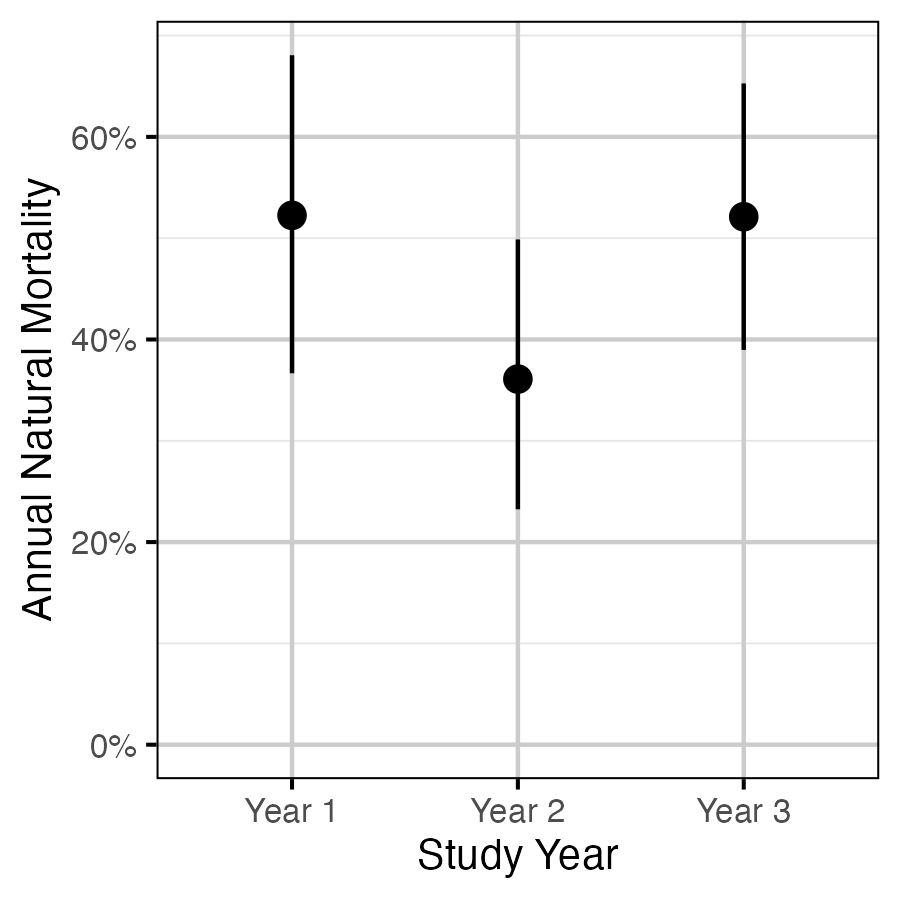

Figure 3. The estimated annual natural mortality by study year for a medium-sized fish (with 95% CIs).

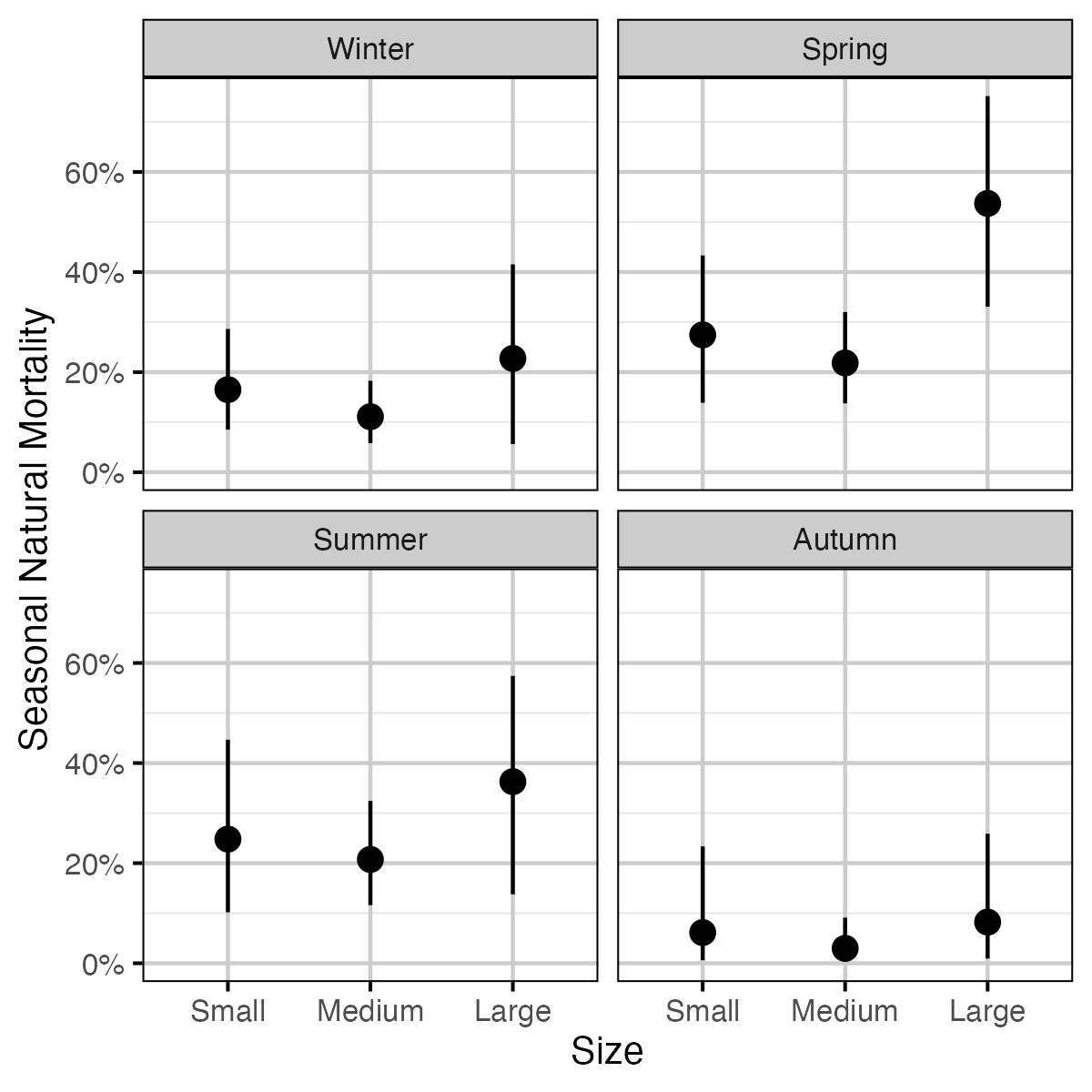

Figure 4. The estimated seasonal natural mortality by season and size, averaged across study year (with 95% CIs).

Figure 5. The estimated effect of bi-monthly period on recapture probability for an average length fish (with 95% CIs).

Figure 6. The estimated effect of fork length on recapture probability (with 95% CIs) in the first bi-monthly period (Jan - Feb).

Recommendations

- Data manipulation

- Separate

detectedintodetected_fixedanddetected_censusto allow the model to handle these separately.

- Separate

moved_hintomoved_h_fixedandmoved_h_censusto allow the model to handle these separately. - Assign movement to the period in which the movement began.

- Add a column indicating which receiver the tag was detected at

(with a set of rules to decide which receiver ‘wins’ if detected

at multiple receivers in a month).

- Add a column indicating whether the fish was detected passing through the PIT tag array in the Cowichan River.

- Separate

- Analyses

- Assess validity of the assumption of no migration out of the lake (i.e., from PIT tags or detection at receivers near or in Cowichan River).

- Estimate tag loss from double-tagged individuals.

- Estimate probability of tag removal following recapture and

release.

- Incorporate seasonal variation in growth into the model.

- Estimate effect of fork length on natural mortality using a mechanistic relationship if possible.

- Estimate probability of spawning as function of fork length.

- Estimate effect of spawning on natural mortality.

Acknowledgements

The organisations and individuals whose contributions have made this analytic appendix possible include:

- Kintama Research Services

- David Welch

- Aswea Porter

- Paul Winchell

- DFO

- Erin Rechisky

- MFLNRORD

- Brendan Andersen

- Mike McCulloch

- Jennifer Sibbald

- Scott Silvestri

- Jaro Szczot